1. Introduction to the Gemini API

The Gemini API provides access to Google’s family of multimodal large language models, including Gemini Ultra, Gemini Pro, and Gemini Nano, serving as the successor to LaMDA and PaLM 2. It offers developers a powerful platform for integrating advanced machine learning, natural language processing, and data analytics into their applications. The API supports various capabilities, including text generation, image generation (Imagen), video generation (Veo), music generation (Lyria), and embeddings, along with multimodal understanding of documents, images, video, and audio.

Key Highlights:

- Multimodal Capabilities: Gemini models can process and generate content across various modalities (text, images, audio, video, code, PDF).

- Flexible Access: Accessible through Google AI Studio (aistudio.google.com), Google Cloud Platform (GCP) with Vertex AI, or via third-party platforms.

- Developer-Friendly SDKs: Official Google GenAI SDKs are available for Python, JavaScript/TypeScript, Go, and Java, simplifying integration.

- OpenAI Compatibility: Gemini models can be accessed using OpenAI libraries by modifying a few lines of code, aiding migration for developers familiar with OpenAI’s ecosystem.

- Free Tier Availability: A generous free tier is available, particularly for prototyping and educational purposes, with specific rate limits.

2. Gemini Models Overview

The Gemini API offers a range of models optimized for different use cases, balancing performance, cost, and specific capabilities.

Current and Notable Models:

- Gemini 2.5 Pro: Google’s most powerful “thinking” model, excelling at complex reasoning, advanced coding, and multimodal understanding. It supports input of audio, images, video, text, and PDF, and outputs text. It has a massive input token limit of 1,048,576 tokens. “Thinking” is on by default and cannot be disabled.

- Gemini 2.5 Flash: Offers a strong balance of price and performance. Ideal for large-scale processing, low-latency, high-volume tasks requiring “thinking,” and agentic use cases. Supports multimodal inputs (text, images, video, audio) and outputs text. It also features a 1M token context window and configurable “thinking budgets.”

- Gemini 2.5 Flash-Lite: The smallest and most cost-effective model, designed for high-throughput and real-time, low-latency applications. It supports the same multimodal inputs as 2.5 Flash.

- Gemini 2.5 Flash Live / Native Audio Dialog: These models work with the Live API for low-latency bidirectional voice and video interactions, capable of processing and generating both text and audio.

- Gemini 2.5 Flash/Pro Preview TTS: Text-to-speech models optimized for various audio generation needs, from price-performant to powerful and natural outputs.

- Imagen 4 & 3: Google’s high-fidelity image generation models, capable of generating realistic and high-quality images from text prompts. All generated images include a SynthID watermark. Image generation is a paid-tier feature.

- Veo 3 & 2: State-of-the-art models for generating high-fidelity, 8-second 720p videos from text prompts, with Veo 3 featuring natively generated audio. Video generation is a paid-tier feature.

- Lyria RealTime: An experimental, state-of-the-art, real-time, streaming music generation model, allowing interactive creation and steering of instrumental music.

- Gemini Embedding (gemini-embedding-001): Offers text embedding models for words, phrases, sentences, and code. These foundational embeddings power NLP tasks like semantic search, classification, and clustering, providing context-aware results. It supports flexible output dimensionality (128-3072, with 768, 1536, or 3072 recommended) using Matryoshka Representation Learning (MRL) for efficient storage and computation.

- Gemma 3 & 3n: Lightweight, state-of-the-art open models built from the same technology as Gemini, with Gemma 3n optimized for on-device performance.

Model Versioning:

Models are available in stable, preview, or experimental versions.

- Latest stable: <model>-<generation>-<variation>, e.g., gemini-2.0-flash.

- Stable: <model>-<generation>-<variation>-<version>, e.g., gemini-2.0-flash-001.

- Preview: <model>-<generation>-<variation>-<version>, e.g., gemini-2.5-pro-preview-06-05.

- Experimental: <model>-<generation>-<variation>-<version>, e.g., gemini-2.0-pro-exp-02-05. Experimental models may not be suitable for production and have restrictive rate limits.

3. Accessing and Using the Gemini API

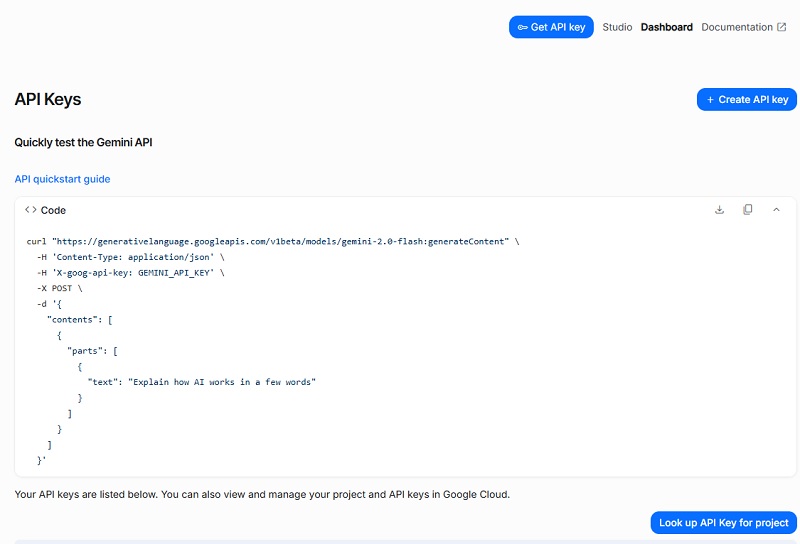

Developers can get started quickly with the Gemini API using Google AI Studio.

3.1 Obtaining an API Key

- Google AI Studio: The recommended and easiest way to get a free API key is by visiting aistudio.google.com/apikey and signing in with a Google account. “That single key unlocks both Pro and Flash endpoints.”

- Google Cloud Platform (GCP): Users can also create a Google Cloud account, create a project, enable the Gemini API in “APIs & Services,” and generate an API key from the “Credentials” section.

3.2 SDKs and Integration

- Google GenAI SDK: The official, production-ready libraries are available for Python (google-genai), JavaScript/TypeScript (@google/genai), Go (google.golang.org/genai), and Java (google-genai). These SDKs are in General Availability (GA) as of May 2025 and are recommended for the latest features and best performance.

- Legacy Libraries: Older libraries like google-generativeai (Python) and @google/generativeai (JavaScript) are on a deprecation path, with support ending by September 2025. Migration to the new SDKs is strongly recommended.

- API Key Management: API keys can be set as environment variables (GEMINI_API_KEY or GOOGLE_API_KEY) for automatic detection by SDKs, or explicitly provided in API calls.

- Security: API keys should be treated like passwords and never committed to source control or exposed client-side in production apps. Server-side calls are recommended, or ephemeral tokens for client-side Live API access. For web apps beyond prototyping, Firebase AI Logic is recommended for enhanced security features.

- OpenAI Compatibility: Developers can use existing OpenAI Python and JavaScript/TypeScript libraries with the Gemini API by setting the api_key to their Gemini key and updating the base_url to https://generativelanguage.googleapis.com/v1beta/openai/. This compatibility extends to features like streaming, function calling, image understanding, and structured output. Image generation via OpenAI compatibility is only available in the paid tier.

3.3 Core Capabilities & Features

- Text Generation: Standard text completion and chat interactions. Chat functionality includes chat.send_message and tracks conversation history.

- Multimodal Understanding: Gemini is “natively multimodal” and excels at understanding various file types (images, audio, PDF) and incorporating them into prompts. Files can be uploaded via client.files.upload or passed as raw bytes.

- Thinking Capabilities: Gemini 2.5 models have “thinking” enabled by default to “enhance response quality,” though this can increase response time and token usage. Developers can control the “thinking budget” (e.g., thinking_budget = 0 to disable thinking for Flash models). Reasoning cannot be turned off for 2.5 Pro models.

- Structured Output: Models can be constrained to respond with structured data formats like JSON, useful for automated processing.

- Long Context: Input millions of tokens to derive understanding from large unstructured data (images, videos, documents).

- Function Calling: Enables models to call external functions, making it easier to get structured data outputs.

- Live API: Facilitates low-latency bidirectional voice and video interactions.

- Batch Mode: Processes large volumes of requests asynchronously, offering a 50% cost reduction compared to interactive requests.

- Embeddings: Generates numerical representations of text, enabling semantic search, classification, and clustering. The embedContent method is used for this, and SEMANTIC_SIMILARITY is a common task type. cosine_similarity is a recommended metric for comparing embeddings.

3.4 Prompt Engineering

- Descriptive and Clear Prompts: Use meaningful keywords, modifiers, and specific terminology relevant to the desired output (e.g., for images: subject, context, style; for videos: subject, action, style, camera motion, composition, ambiance).

- Iteration: Refine prompts by adding more details incrementally to achieve desired results.

- Text in Images: Imagen models can generate text in images, with best results for short phrases (under 25 characters) and iterative regeneration.

- Audio Cues for Veo 3: Videos can include dialogue (quoted speech), sound effects (explicit descriptions), and ambient noise.

- Negative Prompts: Specify elements to exclude (e.g., negativePrompt = “cartoon, drawing, low quality” for Veo 3). Do not use instructive language like “no” or “don’t.”

- Parameterized Prompts: Create prompts with placeholders to allow users to input specific values (e.g., {logo_style}).

4. Pricing and Rate Limits

The Gemini API operates on a “free tier” for testing and a “paid tier” for higher rate limits and additional features. Google AI Studio usage is “completely free in all available countries.”

4.1 Free Tier vs. Paid Tier

- Free Tier: Offers lower rate limits for testing purposes. For example, Gemini 2.5 Pro has a free tier limit of 5 RPM, 250,000 TPM, and 100 RPD.

- Google has extended the “no-cost usage” for Gemini 2.5 Pro (60 requests/minute and 300K tokens/day) directly into every new API key created in Google AI Studio.

- This offer for 2.5 Pro has been extended through June 30, 2026, for verified students and accredited research labs.

- Startups in the “Google for Startups AI Fund” get 12 months of unlimited calls if they migrate workloads to Vertex AI.

- The free quota “will be reviewed quarterly,” with a likely “gradual taper rather than a hard cut-off” after Q4 2025.

- Paid Tier: Provides “higher rate limits, additional features, and different data handling.” Once billing is enabled, “all usage becomes billable—there is no longer a free usage allowance within the paid tier.”

- Pricing is typically per 1 million tokens for input and output, varying by model. For instance, Gemini 2.5 Pro costs $1.25/1M input tokens (prompts <= 200k tokens) and $10.00/1M output tokens.

- Batch Mode requests are 50% of the price of interactive requests.

4.2 Rate Limits and Tiers

Rate limits are measured by Requests Per Minute (RPM), Tokens Per Minute (TPM) for input, and Requests Per Day (RPD). Limits are applied per project.

- Free Tier: Specific limits apply per model (e.g., Gemini 2.5 Pro: 5 RPM, 250,000 TPM, 100 RPD).

- Tier 1: Requires a linked billing account. Significantly increased limits (e.g., Gemini 2.5 Pro: 150 RPM, 2,000,000 TPM, 10,000 RPD).

- Tier 2: Requires >$250 cumulative spend and 30 days since successful payment. Further increased limits (e.g., Gemini 2.5 Pro: 1,000 RPM, 5,000,000 TPM, 50,000 RPD).

- Tier 3: Requires >$1,000 cumulative spend and 30 days since successful payment. Highest limits (e.g., Gemini 2.5 Pro: 2,000 RPM, 8,000,000 TPM, No RPD limit).

4.3 Managing Costs and Usage

- Accidental Overages: Set hard client-side caps using max_tokens and enable “budget alerts” in Cloud Billing.

- Optimizing Free Tier: Strategies that reduce prompt size (e.g., structured system messages, JSON schema hints) can extend free usage, as Gemini 2.5 Pro is “priced internally by compute units; more tokens → more CU → more free quota burned.”

- Vertex AI Migration: Moving to Vertex AI can offer regional inference, private service connect, and scalable quotas, where free student/startup grants become per-project.

- Requesting Increases: Users can request additional per-project quota via Cloud Console, with a “70% approval rate according to reports in the Vertex AI community Slack as of April 2025” for academic or non-profit research.

5. Troubleshooting and Best Practices

5.1 Common Error Codes:

- 400 INVALID_ARGUMENT: Malformed request body (typo, missing field).

- 400 FAILED_PRECONDITION: Free tier not available in country, or billing not enabled.

- 403 PERMISSION_DENIED: Incorrect API key or lack of required permissions.

- 404 NOT_FOUND: Referenced resource (image, audio, video) not found.

- 429 RESOURCE_EXHAUSTED: Rate limit exceeded.

- 500 INTERNAL: Unexpected Google-side error, often due to excessively long input context.

- 503 UNAVAILABLE: Service temporarily overloaded or down.

- 504 DEADLINE_EXCEEDED: Prompt or context too large for processing within deadline.

5.2 Tips for Improving Model Output:

- Check Model Parameters: Ensure candidate count, temperature (0.0-1.0), and TopP (0.0-1.0) are within valid ranges.

- API Version and Model: Use the correct API version (/v1beta for Beta features) and supported models.

- Thinking Feature (2.5 models): Adjust or disable “thinking” if speed or cost is a priority.

- Safety Issues: Review prompts against safety filters. BlockedReason.OTHER indicates a violation of terms of service.

- Recitation/Repetitive Tokens: Make prompts unique, use higher temperature (>= 0.8), or add explicit instructions like “Don’t repeat yourself.”

- Structured Prompts: “For higher quality model outputs, explore writing more structured prompts.”

6. Future Outlook and Deprecation

- Gemini 2.5 Pro Rollout: Expected to replace Gemini 1.5 Pro in Google Workspace (Gmail Smart Replies, Docs “Draft with Gemini”) by July 2025, which should not affect API quotas.

- Fine-tuning: Parameter-efficient fine-tuning (LoRA/IA3) is “coming later in 2025,” expected to incur a “small tuning surcharge.” Prompt-only adapters are currently free.

- Deprecated Models: Gemini 1.5 Flash, Gemini 1.5 Flash-8B, and Gemini 1.5 Pro are deprecated with a deprecation date of September 2025. Developers are encouraged to migrate to newer models.

- Student Tier Re-verification: Students enjoying unlimited free access must “re-verify enrolment each academic year” by August 31, 2025, to avoid downgrade to the public tier.

Facebook

Facebook

LinkedIn

LinkedIn

X

X

Reddit

Reddit