Comet’s mission is centered on empowering practitioners and teams to achieve business value with AI. The company, founded in 2017, builds tools designed to help data scientists, engineers, and team leaders manage the entire Machine Learning (ML) or Large Language Model (LLM) lifecycle, spanning from training all the way through production.

Recognizing that AI presents the century’s biggest opportunity, Comet focuses on addressing fundamental pain points and reducing friction within the ML workflow, allowing practitioners to share their work, iterate, and build better models faster. Comet provides an end-to-end model evaluation platform that includes best-in-class LLM evaluations, experiment tracking, and production monitoring.

The Unified ML Development Stack

Comet aims to reduce the friction caused by disconnected technology stacks and help companies realize value from ML initiatives. It focuses on the three core elements of development: experiment management, model management, and production monitoring.

The platform is highly customizable, enabling data scientists and engineers to manage and optimize models across the entire ML lifecycle within a single user interface. This includes:

- Experiment Tracking: Systematically record, compare, and analyze ML training runs to accelerate development. Users can track and visualize necessary data, comparing code, hyperparameters, metrics, predictions, dependencies, and system metrics.

- Model Management and Registry: Centralize and organize models, enabling versioning, tracking, and seamless deployment across team workflows. This allows users to save model versions, manage deployment stages with tags and webhooks, and track lineage from the model binary through the training datasets.

- ML Model Production Monitoring: Proactively analyze production datasets against training benchmarks. Monitoring features help ensure regulatory compliance and address issues like data drift before they impact end-user experience. Advanced Enterprise features include data drift detection, feature distribution analysis, custom metrics via SQL, and alerts.
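To make the drift-detection idea concrete, here is a minimal sketch of comparing a production feature distribution against its training baseline. It uses the Population Stability Index (PSI), a common drift statistic chosen here purely for illustration; the source does not say which statistics Comet computes. The function name `population_stability_index` and the sample data are hypothetical and are not part of any Comet API.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Illustrative PSI between a training sample and a production sample.

    Assumption: equal-width bins derived from the training sample's range.
    This is a generic drift statistic, not Comet's implementation.
    """
    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 if every training value is identical.
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range production values into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        eps = 1e-6  # floor so that empty bins do not produce log(0)
        return [max(c / len(values), eps) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: production is shifted relative to training.
training = [i / 100 for i in range(100)]
production = [0.5 + i / 100 for i in range(100)]

no_drift = population_stability_index(training, training)
drift = population_stability_index(training, production)
print(f"PSI (same data): {no_drift:.3f}, PSI (shifted data): {drift:.3f}")
```

A widely used rule of thumb treats a PSI above roughly 0.2 as a meaningful shift, which is the kind of threshold an alert could be attached to.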

Comet offers extensive framework support for ML Experiment Management, integrating with popular tools such as PyTorch, PyTorch Lightning, Hugging Face, Keras, TensorFlow, scikit-learn, and XGBoost.

Opik: Open Source LLM Evaluation

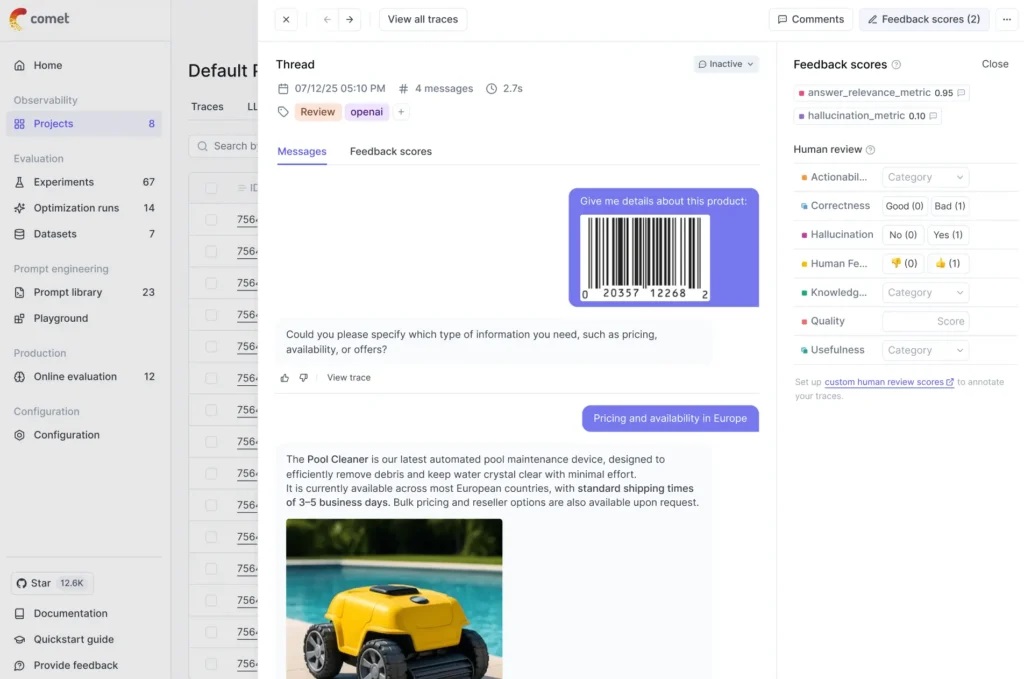

A key component of Comet’s end-to-end evaluation platform is Opik, the open source LLM evaluation platform. Opik helps AI developers build, log, test, and iterate at each stage of the development cycle to confidently scale LLM-powered applications and AI agents from prototype to production.

Opik is a true open-source project, with its full evaluation feature set available free in the source code for local or self-hosted deployment. It is also powered by Comet’s enterprise-grade infrastructure, ensuring reliable performance at scale.

Opik provides comprehensive LLM observability, helping users understand what is happening across complex Generative AI systems. Key capabilities include:

- Logging Traces and Spans: Log traces to capture and organize the application’s LLM calls, providing total observability to visualize context retrieval, tool selection, and user feedback. Traces appear almost instantly, even at high volumes.

- Evaluation and Debugging: Users can debug with human feedback, annotating traces to label what is and is not working. Testing and scoring can then be scaled with automated LLM evaluation metrics that check for issues such as hallucination, context precision, and relevance. Built-in LLM judges are available for more complex issues.

- Agent Optimization: Opik automatically generates and tests prompts for steps in an agentic system, recommending top performers based on desired metrics. This automated optimization draws on four optimizers: Few-shot Bayesian, MIPRO, evolutionary, and LLM-powered MetaPrompt.

- Guardrails and Testing: The platform includes guardrails to screen user inputs and LLM outputs, detecting and redacting unwanted content such as PII and competitor mentions. Developers can also establish reliable performance baselines using Opik’s LLM unit tests, built on pytest, to evaluate the entire LLM pipeline on every deploy.

- Integrations: Opik is compatible with any LLM and offers direct integrations with tools like LangChain, LlamaIndex, OpenAI, LiteLLM, and DSPy.
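The trace-and-span model described in the list above can be illustrated with a small, library-free sketch. Everything here (the `traced` decorator, the `Span` class, the `TRACES` list, and the stand-in `call_llm` function) is hypothetical and written only to show how nested calls become a tree of spans; it is not Opik's API, and a real integration would use the SDK's own instrumentation.

```python
import functools
import time
from contextvars import ContextVar
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional


@dataclass
class Span:
    """One recorded step: its name, inputs, output, timing, and child steps."""
    name: str
    inputs: Dict[str, Any]
    output: Any = None
    duration_s: float = 0.0
    children: List["Span"] = field(default_factory=list)


# The span currently executing, so nested calls can attach to their parent.
_current_span: ContextVar[Optional[Span]] = ContextVar("current_span", default=None)
# Top-level spans; each one is the root of a trace.
TRACES: List[Span] = []


def traced(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Record each call to `fn` as a span nested under the active span."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        span = Span(name=fn.__name__, inputs={"args": args, "kwargs": kwargs})
        parent = _current_span.get()
        if parent is not None:
            parent.children.append(span)
        else:
            TRACES.append(span)
        token = _current_span.set(span)
        start = time.perf_counter()
        try:
            span.output = fn(*args, **kwargs)
            return span.output
        finally:
            span.duration_s = time.perf_counter() - start
            _current_span.reset(token)
    return wrapper


@traced
def retrieve_context(question: str) -> List[str]:
    # Stand-in for a retrieval step.
    return ["Comet was founded in 2017."]


@traced
def call_llm(question: str, context: List[str]) -> str:
    # Stand-in for a model call; no real LLM is contacted.
    return f"Answer based on {len(context)} document(s)."


@traced
def answer(question: str) -> str:
    context = retrieve_context(question)
    return call_llm(question, context)


result = answer("When was Comet founded?")
root = TRACES[0]
print(root.name, [child.name for child in root.children])
```

Holding the active span in a `ContextVar` lets each nested call find its parent without passing it explicitly, which is why the retrieval and model steps end up as children of the top-level `answer` span.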

Adoption and Accessibility

Comet’s platform is utilized by hundreds of organizations, including academic teams, startups, and enterprise companies. An estimated 70% of people in North America have interacted with an ML or deep learning model that was trained using the Comet platform. The platform is trusted by innovative teams, with users noting that Comet has brought significant value and improved efficiency to their ML workflows, allowing them to speed up research cycles and reliably reproduce projects.

Comet offers flexible pricing plans designed for different team sizes, all of which include LLM evaluation.

- Comet Free: Ideal for individuals. Sign-up does not require a credit card, and the plan includes unlimited LLM team members, 25k spans per month, core experiment tracking features with fair usage limits, and 100GB of data storage.

- Comet Pro: Designed for growing teams, supporting up to 10 users and 1,500 training hours.

- Comet Enterprise: Tailored for large organizations requiring enhanced security and compliance (including SOC 2, HIPAA, and GDPR compliance). This plan offers unlimited usage, advanced monitoring features, and flexible deployment options, including cloud-based, on-premises, and fully managed solutions.

Additionally, Comet offers a free Pro plan for academic users, including researchers, students, and educators.

Comet, headquartered in New York City with offices in Tel Aviv, supports a global, remote-first workforce.
