Artificial intelligence is rapidly evolving from simple question-and-answer systems into autonomous systems capable of planning, decision-making, and real-world task execution. This shift is powered by frameworks known as Agent Development Kits (ADKs), which streamline the creation and deployment of AI agents. This article explains what ADKs are, focuses on their implementation in TypeScript, and looks at how they apply to Agentic Retrieval-Augmented Generation (RAG).

What are AI Agents and Agent Development Kits?

AI agents are autonomous systems designed to perform complex tasks, leveraging advanced machine learning models and orchestration frameworks. Initially seen as chatbots, these agents are now evolving into powerful workflow orchestrators that can plan, make decisions, and collaborate in multi-agent operations. Key concepts of AI agents include autonomy (performing tasks without constant human intervention), tool integration (utilizing helper functions and external APIs), complex workflow orchestration, and inter-agent communication.
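To make autonomy and tool integration concrete, here is a minimal sketch of an agent loop in TypeScript. Every name in it (`Tool`, `Step`, `planNextStep`, `runAgent`) is hypothetical and invented for illustration, and the planner is a hard-coded stand-in for an LLM call. It does not show the API of any real ADK.

```typescript
// Hypothetical sketch: a tiny agent loop with tool integration.
// planNextStep is a plain function standing in for an LLM planner.

type Tool = {
  name: string;
  description: string;
  run: (input: string) => string;
};

type Step =
  | { kind: "call_tool"; tool: string; input: string }
  | { kind: "finish"; answer: string };

const tools: Tool[] = [
  {
    name: "add",
    description: "Adds two comma-separated numbers",
    run: (input) => {
      const [a, b] = input.split(",").map(Number);
      return String(a + b);
    },
  },
];

// Decides the next step from the task and the observations gathered so far.
function planNextStep(task: string, observations: string[]): Step {
  if (observations.length === 0) {
    return { kind: "call_tool", tool: "add", input: task };
  }
  return { kind: "finish", answer: `Result: ${observations[observations.length - 1]}` };
}

// The agent loop: plan, act, observe, and repeat until finished or out of steps.
function runAgent(task: string, maxSteps = 5): string {
  const observations: string[] = [];
  for (let i = 0; i < maxSteps; i++) {
    const step = planNextStep(task, observations);
    if (step.kind === "finish") return step.answer;
    const tool = tools.find((t) => t.name === step.tool);
    if (!tool) throw new Error(`Unknown tool: ${step.tool}`);
    observations.push(tool.run(step.input));
  }
  return "Stopped: step limit reached";
}
```

The step limit is the important design choice here: an autonomous loop needs an explicit bound so that a confused planner cannot run forever.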

An Agent Development Kit (ADK) is a flexible and modular framework for developing and deploying these AI agents. It aims to make agent development more akin to traditional software development, simplifying the creation, deployment, and orchestration of agentic architectures, from simple tasks to complex workflows. Google offers its own ADK, optimized for its ecosystem but designed to be model-agnostic and deployment-agnostic, with compatibility for other frameworks. This kit helps developers build autonomous agents that can plan, decide, act, and learn.

ADK for TypeScript: Empowering Developers with Type Safety and Modularity

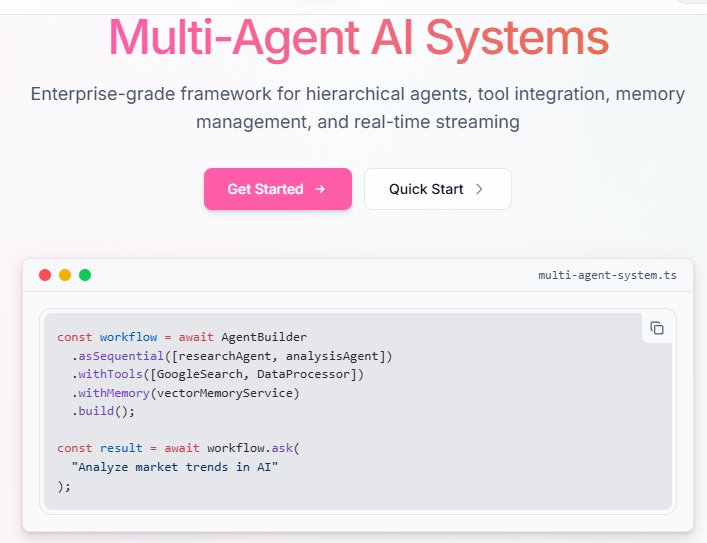

Inspired by Google’s Python ADK, IQ AI’s Agent Development Kit (ADK) for TypeScript reimagines the architecture for the TypeScript ecosystem. Launched on July 17, 2025, it is an open-source framework designed to facilitate the development, orchestration, and deployment of intelligent AI agents. It provides a TypeScript-first toolkit with a strong emphasis on type safety and modularity. This focus helps reduce common development errors, improves code readability, and streamlines the development process.

Key Features of ADK for TypeScript:

- AgentBuilder API: This fluent interface simplifies agent creation by minimizing boilerplate code, allowing for quick setup of agents, from simple instances to complex multi-agent workflows.

- Multi-LLM Compatibility: The framework offers seamless compatibility with a wide range of large language models (LLMs) like OpenAI’s GPT series, Google Gemini, Anthropic Claude, and Mistral, through a unified interface, providing flexibility in model selection.

- Modular Architecture and Tool Integration: ADK for TypeScript features a modular and flexible architecture that enables developers to compose agents and integrate various tools, including ready-to-use or custom-built functionalities. Tool integration is facilitated by the Model Context Protocol (MCP), supporting advanced tooling and automatic schema generation.

- Memory Management and Session Handling: It includes robust features for stateful memory and session management, allowing agents to maintain long-term context and state across interactions. This is crucial for building AI assistants and autonomous agents requiring persistent knowledge.

- Tracing and Evaluation: The kit incorporates OpenTelemetry support for tracing and performance evaluation, enabling developers to debug agent behavior, monitor performance metrics, and gain insights into complex multi-agent systems.

- Multi-Agent Workflows: ADK for TypeScript provides comprehensive support for orchestrating complex multi-agent workflows, allowing coordination of agent teams to handle intricate tasks. It supports various orchestration logics, including sequential, parallel, and LLM-driven routing.

- On-Chain Capabilities: For Web3 developers, ADK for TypeScript offers native support for integrating with blockchain and decentralized finance (DeFi) applications. This enables AI agents to interact directly with on-chain data and protocols, performing actions like analyzing DeFi positions, executing token swaps, managing tokenized agents, and interacting with smart contracts.

- Deployment and Scalability: Engineered for production readiness, ADK for TypeScript supports various deployment options, including Docker, making agents cloud-ready and easy to containerize. Built-in features like session management, persistent memory, and OpenTelemetry ensure agents scale from prototypes to full-scale production applications reliably.
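The fluent-interface idea behind an AgentBuilder-style API can be illustrated without the library itself. The sketch below is not IQ AI's actual API: `SketchAgentBuilder`, its method names, and the `"example-model"` identifier are all hypothetical. It only shows how chained, typed calls can replace hand-written configuration objects.

```typescript
// Hypothetical fluent builder; NOT the real ADK for TypeScript API.

interface AgentConfig {
  name: string;
  model: string;
  instruction: string;
  tools: string[];
}

class SketchAgentBuilder {
  private config: Partial<AgentConfig> & { tools: string[] };

  private constructor(name: string) {
    this.config = { name, tools: [] };
  }

  static create(name: string): SketchAgentBuilder {
    return new SketchAgentBuilder(name);
  }

  withModel(model: string): this {
    this.config.model = model;
    return this;
  }

  withInstruction(instruction: string): this {
    this.config.instruction = instruction;
    return this;
  }

  withTool(toolName: string): this {
    this.config.tools.push(toolName);
    return this;
  }

  // Validates required fields once, at build time.
  build(): AgentConfig {
    const { name, model, instruction, tools } = this.config;
    if (!name || !model) {
      throw new Error("An agent needs both a name and a model");
    }
    return { name, model, instruction: instruction ?? "", tools: [...tools] };
  }
}

const agentConfig = SketchAgentBuilder.create("support-agent")
  .withModel("example-model") // placeholder model id, not a real model name
  .withInstruction("Answer questions about billing.")
  .withTool("lookup_invoice")
  .build();
```

Because each method returns `this`, the compiler checks every call in the chain, and a missing required field surfaces as a single error in `build()` rather than at some later point during execution.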

Agentic Retrieval-Augmented Generation (RAG) with TypeScript

Retrieval-Augmented Generation (RAG) improves LLM output by connecting the model to external knowledge bases, leading to more relevant and higher-quality responses. When AI agents are integrated into this process, it becomes Agentic RAG, which is more adaptable and accurate than traditional RAG. In Agentic RAG, agents handle retrieval, generation, critique, and orchestration, so the system can pull information from multiple sources and manage more complex workflows. Traditional RAG, by contrast, has limited decision-making and a static context.

Building an Agentic RAG system in TypeScript using the Langbase SDK provides a practical example of how these concepts come together:

- Project Setup: Initialize a Node.js project, create the necessary TypeScript files (e.g., `create-memory.ts`, `upload-docs.ts`, `create-pipe.ts`, `agents.ts`, `index.ts`), and install the `langbase` and `dotenv` dependencies.

- API Key Configuration: Obtain a Langbase API key from their studio and an LLM API key (e.g., OpenAI). These keys are stored in a `.env` file.

- Create Agentic AI Memory: Use `langbase.memories.create` to set up a serverless AI memory, which includes a vector store by default. This memory is designed to acquire, process, retain, and retrieve information seamlessly, dynamically attaching private data to LLMs and reducing hallucinations.

- Add Documents to AI Memory: Upload documents (e.g., PDF, markdown files) to the created memory using `langbase.memories.documents.upload`. The system automatically chunks the documents, sends them to the chosen embedding model (e.g., OpenAI) for embedding, and stores these embeddings in its internal vector store for efficient semantic search. Metadata can be added to documents for better organization and filtering.

- Perform RAG Retrieval: When a query is received, the system creates embeddings for the query and compares them with the document embeddings in memory to find semantically similar chunks. The `langbase.memories.retrieve` method is used to fetch the most relevant chunks. This is not a keyword search but a semantic search, finding data whose meaning is similar to the query.

- Create Support Pipe Agent: A “Pipe agent” in Langbase is a serverless AI agent with unified APIs for LLMs. This agent will be configured with a system prompt to act as a helpful AI assistant.

- Generate RAG Responses: The retrieved chunks are then provided as context to the Pipe agent’s LLM, along with the user’s query. The LLM uses this augmented context to generate a precise, contextually accurate response. Citations back to the source documents can be included in the response by leveraging metadata added during document upload.
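The retrieval and generation steps above can be sketched without any SDK. The example below is not Langbase's implementation. It assumes cosine similarity as the comparison metric, uses hand-written three-dimensional vectors in place of real embeddings, and all names (`Chunk`, `retrieve`, `buildPrompt`) are hypothetical. It shows how a query embedding is ranked against stored chunk embeddings and how the top chunks, with their source metadata, are assembled into the context passed to the LLM.

```typescript
// Hypothetical sketch of semantic retrieval. Toy 3-dimensional vectors
// stand in for real embeddings produced by an embedding model.

interface Chunk {
  text: string;
  source: string; // metadata used for citations
  embedding: number[];
}

function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("Vector lengths differ");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Returns the topK chunks most similar to the query embedding.
function retrieve(queryEmbedding: number[], chunks: Chunk[], topK: number): Chunk[] {
  return chunks
    .map((chunk) => ({ chunk, score: cosineSimilarity(queryEmbedding, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, topK)
    .map((scored) => scored.chunk);
}

// Builds the augmented prompt handed to the LLM, with numbered sources.
function buildPrompt(question: string, retrieved: Chunk[]): string {
  const context = retrieved
    .map((c, i) => `[${i + 1}] (${c.source}) ${c.text}`)
    .join("\n");
  return `Answer using only the context below and cite sources by number.\n\nContext:\n${context}\n\nQuestion: ${question}`;
}

const memory: Chunk[] = [
  { text: "Refunds are issued within 14 days.", source: "refunds.md", embedding: [0.9, 0.1, 0.0] },
  { text: "Support is available on weekdays.", source: "support.md", embedding: [0.1, 0.9, 0.0] },
  { text: "Invoices are sent monthly.", source: "billing.md", embedding: [0.0, 0.2, 0.9] },
];

// Pretend this vector is the embedding of "How long do refunds take?"
const queryEmbedding = [0.8, 0.2, 0.1];
const topChunks = retrieve(queryEmbedding, memory, 2);
const augmentedPrompt = buildPrompt("How long do refunds take?", topChunks);
```

With these toy vectors the refund chunk ranks first even though the ranking never compares words, which is the sense in which the search is semantic rather than keyword-based.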

Designing Effective Workflows and Multi-Agent Systems

The true power of AI agents comes from combining different types of agents and orchestrating complex workflows. Google’s ADK categorizes agents into:

- LLM Agents: Utilize LLMs as their core engine for natural language understanding, reasoning, planning, and dynamic decision-making.

- Workflow Agents: Control the execution flow of other agents in predefined, deterministic patterns (sequential, parallel, or loop) without using an LLM for flow control.

- Custom Agents: Extend `BaseAgent` directly to implement unique operational logic or specialized integrations.
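The three deterministic patterns that workflow agents provide (sequential, parallel, and loop) can be sketched as plain function combinators. This is an illustration only, not Google's ADK API. The `Agent` type and the `sequential`, `parallel`, and `loop` helpers are hypothetical names, and the two stand-in agents do string manipulation where a real system would call an LLM.

```typescript
// Hypothetical sketch of deterministic workflow agents (no LLM decides the flow).

type Agent = (input: string) => Promise<string>;

// Sequential: each agent receives the previous agent's output.
function sequential(...agents: Agent[]): Agent {
  return async (input) => {
    let current = input;
    for (const agent of agents) {
      current = await agent(current);
    }
    return current;
  };
}

// Parallel: all agents receive the same input; outputs are joined in order.
function parallel(...agents: Agent[]): Agent {
  return async (input) => {
    const results = await Promise.all(agents.map((agent) => agent(input)));
    return results.join(" | ");
  };
}

// Loop: re-runs an agent until the predicate holds or maxIterations is reached.
function loop(agent: Agent, done: (output: string) => boolean, maxIterations: number): Agent {
  return async (input) => {
    let current = input;
    for (let i = 0; i < maxIterations; i++) {
      current = await agent(current);
      if (done(current)) break;
    }
    return current;
  };
}

// Stand-in agents; in a real system these would wrap LLM calls.
const upper: Agent = async (s) => s.toUpperCase();
const exclaim: Agent = async (s) => `${s}!`;

const pipeline = sequential(
  upper,
  parallel(exclaim, exclaim),
  loop(exclaim, (s) => s.endsWith("!!!"), 10),
);
```

Because each combinator returns another `Agent`, workflows nest freely, and the control flow stays predictable regardless of what the individual agents do internally.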

Effective workflow design is critical. LlamaIndex, for instance, uses an event-based “Workflows” system in both its Python and TypeScript frameworks to orchestrate agentic execution. This system connects a series of execution steps via events, allowing for chains, branches, loops, fan-outs, and collections. It embraces a hybrid pattern, blending autonomy and structure, where decisions about control flow can be made by LLMs or traditional imperative programming.
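To show the event-driven idea in miniature, the following sketch connects steps through events and uses them to express a branch and a loop. It is not the LlamaIndex API. `MiniWorkflow`, `WorkflowEvent`, and the event names are hypothetical, and the "summarize" step simply truncates text where a real step might call an LLM.

```typescript
// Hypothetical event-driven workflow; this is NOT the LlamaIndex API.

type WorkflowEvent = { type: string; payload: string };
type StepHandler = (event: WorkflowEvent) => WorkflowEvent[];

class MiniWorkflow {
  private handlers = new Map<string, StepHandler>();

  // Registers the step that runs when an event of this type arrives.
  on(eventType: string, handler: StepHandler): this {
    this.handlers.set(eventType, handler);
    return this;
  }

  // Processes queued events until a "stop" event appears.
  run(start: WorkflowEvent, maxEvents = 100): string {
    const queue: WorkflowEvent[] = [start];
    let processed = 0;
    while (queue.length > 0) {
      if (processed++ >= maxEvents) throw new Error("Event limit exceeded");
      const event = queue.shift()!;
      if (event.type === "stop") return event.payload;
      const handler = this.handlers.get(event.type);
      if (!handler) throw new Error(`No step handles "${event.type}"`);
      queue.push(...handler(event));
    }
    throw new Error("Workflow ended without a stop event");
  }
}

const workflow = new MiniWorkflow()
  // Branch: short inputs go straight to the end, long ones to the summarizer.
  .on("start", (e) =>
    e.payload.length > 20
      ? [{ type: "summarize", payload: e.payload }]
      : [{ type: "stop", payload: e.payload }],
  )
  // Loop: trim the text, then re-enter the branch step to check again.
  .on("summarize", (e) => [
    { type: "start", payload: e.payload.slice(0, e.payload.length - 10) },
  ]);
```

Calling `workflow.run({ type: "start", payload: someText })` returns the text once it is 20 characters or shorter. A step that returned several events at once would give a fan-out in the same structure.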

The “hybrid pattern” for agent design offers several benefits:

- Better reliability through predictable execution paths for critical operations.

- Clearer error handling and recovery mechanisms.

- Easier debugging and tracing of agent behavior.

- Improved performance through optimized pathways for known scenarios.

- Maintaining autonomy where it provides value.

- First-class support for human oversight via human-in-the-loop interventions.

This approach recommends defining structure for well-understood processes, critical business logic, error-prone operations (allowing human-in-the-loop intervention), and structured output requirements. Conversely, autonomy is beneficial for unstructured inputs (like contracts, invoices, customer service queries), flexible rule sets (handling edge cases), and novel situations where dynamic adaptation is needed.
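One way to picture this division of labor is a router that tries fixed rules first, pauses for human approval on risky operations, and falls back to the model only for unstructured input. The sketch below is an assumption-laden illustration: `SupportRequest`, `decide`, `llmPropose`, the action names, and the approval threshold of 500 are all hypothetical, and `llmPropose` is a stub in place of a real LLM call.

```typescript
// Hypothetical hybrid router: deterministic rules first, autonomy as a
// fallback, and a human-approval gate for risky actions.

type Decision =
  | { route: "structured"; action: string }
  | { route: "autonomous"; action: string }
  | { route: "needs_human"; action: string; reason: string };

interface SupportRequest {
  kind: "refund" | "invoice" | "other";
  amount?: number;
  text: string;
}

// Stub standing in for an LLM that proposes an action for unstructured input.
function llmPropose(text: string): string {
  return `draft_reply:${text.slice(0, 20)}`;
}

function decide(req: SupportRequest, approvalThreshold = 500): Decision {
  // Well-understood process: handled by fixed business logic.
  if (req.kind === "invoice") {
    return { route: "structured", action: "send_invoice_copy" };
  }
  if (req.kind === "refund") {
    const amount = req.amount ?? 0;
    // High-impact operation: pause for human oversight above the threshold.
    if (amount > approvalThreshold) {
      return {
        route: "needs_human",
        action: "issue_refund",
        reason: `amount ${amount} exceeds ${approvalThreshold}`,
      };
    }
    return { route: "structured", action: "issue_refund" };
  }
  // Novel or unstructured input: let the model decide.
  return { route: "autonomous", action: llmPropose(req.text) };
}
```

The discriminated union makes each path explicit in the type system, so calling code has to handle the human-approval case rather than silently treating it like an automated one.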

Community and Open Source

Many ADK frameworks, including IQ AI’s ADK for TypeScript, are open-source projects. This fosters a community-driven development model that encourages collaboration, contributions, and discussion among developers. Publishing the code on platforms like GitHub, often under permissive licenses such as the MIT License, promotes broad adoption and modification. This open approach helps build a vibrant ecosystem around these toolkits.

Conclusion

Agent Development Kits are shaping the future of AI by providing the tools needed to build intelligent, autonomous, and scalable systems. Type-safe frameworks like IQ AI’s ADK for TypeScript and platforms like Langbase, which simplify Agentic RAG implementation, let developers move beyond simple chatbots. With them, developers can create AI applications that interact with real-world data, perform complex tasks, and integrate into a range of environments, including the Web3 ecosystem. The blend of structured workflows with LLM-driven autonomy is proving to be a pragmatic and effective approach for developing robust and reliable AI agents.
